Loader Parameters Tab:

This tab lets you define how data is loaded into the Data Source. The options available on this tab depend on the Loading Method selected on the General Tab.

Delimiter:

- Purpose: This option allows you to specify the delimiter that separates fields in the source file.

- Default Value: If you're loading data exported from MS Excel or from other text files, the default delimiter is usually Tab (‘\t’).

- Custom Delimiters: If your source file uses a different delimiter (e.g., comma, semicolon, or pipe), you can select it as a custom delimiter.

Examples of delimiters:

- Comma (‘,’): Commonly used in CSV files.

- Semicolon (‘;’): Common in some European CSV files, where the comma is used as the decimal separator.

- Pipe (‘|’): Used in certain custom file formats.
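To see why the delimiter setting matters, the following Python sketch parses the same kind of record with different delimiters using the standard-library `csv` module. It only illustrates delimiter handling in general and is not how the loader itself works; `split_fields` and the sample file contents are hypothetical.

```python
import csv
import io

def split_fields(text: str, delimiter: str) -> list[list[str]]:
    """Split delimited text into rows of fields using a single-character delimiter."""
    # csv.reader also honours quoting, so a quoted field may contain the delimiter.
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))

# Illustrative one-record files; the field values are made up.
tab_file = "ID\tNAME\tAMOUNT\n1\tAcme\t100.50\n"
pipe_file = "ID|NAME|AMOUNT\n1|Acme|100.50\n"
semicolon_file = "ID;NAME;AMOUNT\n1;Acme;100,50\n"  # decimal comma, so ';' separates fields

print(split_fields(pipe_file, "|"))       # [['ID', 'NAME', 'AMOUNT'], ['1', 'Acme', '100.50']]
print(split_fields(semicolon_file, ","))  # wrong delimiter: fields are split in the wrong places
```

With the wrong delimiter, the semicolon file's data row comes back as `['1;Acme;100', '50']`, which is the kind of misaligned load the Delimiter setting prevents.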

Number of Rows to Skip:

- Purpose: This option allows you to specify how many rows in the source file should be skipped before the actual data is loaded. This is typically useful for skipping header rows, comments, or metadata that are not part of the actual data.

- Typical Use: If your file begins with header rows (e.g., column names), set this value to the number of those rows so they are not loaded as data.

Example:

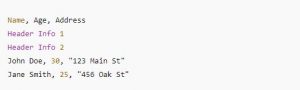

-

If your file looks like this:

You might want to skip the first two rows, so you’d set this value to 2.
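A minimal Python sketch of the skip-rows setting, assuming the loader discards whole physical lines before parsing (whether the tool counts lines or parsed records is an assumption here). `load_rows` and the sample file contents are hypothetical.

```python
import csv
import io
from itertools import islice

# Hypothetical file: a metadata line and a column-name line precede the data.
SOURCE = (
    "Extract generated 2025-03-02\n"
    "ID,NAME,AMOUNT\n"
    "1,Acme,100.50\n"
    "2,Globex,75.00\n"
)

def load_rows(text: str, delimiter: str = ",", rows_to_skip: int = 0) -> list[list[str]]:
    """Discard the first `rows_to_skip` lines, then parse the rest as delimited records."""
    lines = io.StringIO(text)
    # islice drops physical lines before csv.reader ever sees them.
    remaining = islice(lines, rows_to_skip, None)
    return list(csv.reader(remaining, delimiter=delimiter))

print(load_rows(SOURCE, ",", rows_to_skip=2))  # [['1', 'Acme', '100.50'], ['2', 'Globex', '75.00']]
```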

Data Source Instance:

In a data management or ETL (Extract, Transform, Load) system, a Data Source Instance refers to a specific snapshot of a dataset, captured at a particular point in time. The instance is identified by two main attributes:

- ASOFDATE: The date and time that the data represents. For example, when you work with historical data or generate reports for a specific period, the ASOFDATE defines the exact "point in time" the dataset corresponds to.

- Instance Keys: Additional attributes you can define to distinguish between versions or snapshots of the data. They can serve as version identifiers or as tags for different states of the data.
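One way to picture how an instance is identified is as a small immutable key made of the ASOFDATE and the instance keys. In this Python sketch, `DataSourceInstance` and the `SCENARIO` key are hypothetical names, not part of the product; the point is only that two loads with the same ASOFDATE but different instance-key values count as distinct instances.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class DataSourceInstance:
    """Hypothetical model: an instance is identified by ASOFDATE plus its instance keys."""
    as_of_date: date
    instance_keys: tuple[tuple[str, str], ...] = ()

    @classmethod
    def of(cls, as_of_date: date, **keys: str) -> "DataSourceInstance":
        # Sort the keys so the same key/value set always produces the same identity.
        return cls(as_of_date, tuple(sorted(keys.items())))

base = DataSourceInstance.of(date(2025, 2, 28), SCENARIO="BASE")
stress = DataSourceInstance.of(date(2025, 2, 28), SCENARIO="STRESS")
print(base == stress)  # False: same ASOFDATE, different instance key
```

Making the class frozen also makes it hashable, so an instance identity can be used directly as a dictionary key or set member.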

Instance Keys Tab (Versioning Mechanism)

The Instance Keys tab is part of a versioning mechanism that lets you specify unique identifiers for different versions of the data. Tagging the data with these instance keys lets you track changes over time and reference specific versions of the dataset, which is useful for auditing, version control, and consistent reporting.
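The versioning idea can be sketched as an in-memory store in which each snapshot is filed under its ASOFDATE plus instance keys, so an earlier version stays retrievable after a newer one is loaded. `InstanceStore` and the `VERSION` key are hypothetical; a real system would persist snapshots in its database rather than in a Python dictionary.

```python
from datetime import date

class InstanceStore:
    """Hypothetical in-memory store: each snapshot is filed under (ASOFDATE, instance keys)."""

    def __init__(self) -> None:
        self._snapshots: dict[tuple[date, frozenset], list[dict]] = {}

    @staticmethod
    def _key(as_of_date: date, keys: dict[str, str]) -> tuple[date, frozenset]:
        return (as_of_date, frozenset(keys.items()))

    def save(self, as_of_date: date, keys: dict[str, str], rows: list[dict]) -> None:
        # Copy the rows so later changes to the caller's list don't alter the stored version.
        self._snapshots[self._key(as_of_date, keys)] = [dict(r) for r in rows]

    def get(self, as_of_date: date, keys: dict[str, str]) -> list[dict]:
        return self._snapshots[self._key(as_of_date, keys)]

    def versions(self, as_of_date: date) -> list[dict[str, str]]:
        """List the instance-key sets stored for one ASOFDATE, in load order."""
        return [dict(k) for d, k in self._snapshots if d == as_of_date]

store = InstanceStore()
asof = date(2025, 2, 28)
store.save(asof, {"VERSION": "1"}, [{"ID": 1, "AMOUNT": 100.5}])
store.save(asof, {"VERSION": "2"}, [{"ID": 1, "AMOUNT": 101.0}])  # restated figures
print(store.get(asof, {"VERSION": "1"}))  # the original figures remain retrievable
```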

Execution Date vs. As of Date

- Execution Date: The date on which the data load process actually runs, i.e., when the data is imported or processed. This is often, but not necessarily, the current date.

- As of Date: The date for which the data is reported or analyzed. For example, in a financial report the as-of date might be the last day of the quarter, even if the data is loaded into the system on a different date.

Example:

- If the data is loaded on March 2nd, 2025, but the report must reflect the data as of February 28th, 2025, the Execution Date is March 2nd and the As of Date is February 28th.
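The two dates are independent values. As an illustration, if the as-of date is taken to be the last day of the month before the load runs (an assumption for this sketch; in practice it is usually a parameter you supply), it can be derived from the execution date in Python like this. `previous_month_end` is a hypothetical helper.

```python
from datetime import date, timedelta

def previous_month_end(execution_date: date) -> date:
    """Return the last calendar day of the month before `execution_date`."""
    first_of_month = execution_date.replace(day=1)
    # Stepping back one day from the 1st lands on the previous month's last day.
    return first_of_month - timedelta(days=1)

execution_date = date(2025, 3, 2)  # the day the load actually runs
as_of_date = previous_month_end(execution_date)
print(execution_date, as_of_date)  # 2025-03-02 2025-02-28
```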

Stream Key

A Stream Key is a mechanism for creating a subset of data based on specific filtering conditions (typically using the IN condition). It allows you to stream or process specific data based on selected values for one or more columns. This helps in managing large datasets where you only need to process a subset of the data rather than the entire dataset.

Key Features of Stream Key:

- Subset of Data: Stream Key allows you to define and work with a subset of data based on specific values.

- IN Condition: The subset is often defined by the IN condition, allowing users to filter data by one or more values within a column. For instance, you may choose to stream data only for certain customer IDs, product categories, or geographic regions.

Example:

- If you have a dataset of sales records and only want to load data for certain products (e.g., Product IDs 101, 102, and 105), you can define a Stream Key that filters the dataset on these values:

- Stream Key = Product ID IN (101, 102, 105)

This allows for more efficient processing, especially when dealing with large datasets, as it limits the amount of data that needs to be loaded or processed at once.
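The filtering a Stream Key performs is equivalent to a SQL `WHERE product_id IN (101, 102, 105)` clause. The Python sketch below applies one or more `column IN (values)` conditions to rows as they are read, combining conditions on multiple columns with AND (an assumption; check how your tool combines them). `apply_stream_key` and the sample `SALES` records are hypothetical.

```python
from collections.abc import Iterable, Iterator, Mapping

# Hypothetical sales records; column names are illustrative only.
SALES = [
    {"product_id": 101, "region": "EU", "amount": 250.0},
    {"product_id": 103, "region": "US", "amount": 80.0},
    {"product_id": 102, "region": "US", "amount": 120.0},
    {"product_id": 105, "region": "APAC", "amount": 60.0},
    {"product_id": 107, "region": "EU", "amount": 45.0},
]

def apply_stream_key(rows: Iterable[dict], stream_key: Mapping[str, Iterable]) -> Iterator[dict]:
    """Yield only rows that satisfy every `column IN (values)` condition in `stream_key`."""
    # Convert each value list to a set once, so each membership test is a hash lookup.
    conditions = {col: set(values) for col, values in stream_key.items()}
    for row in rows:
        if all(row[col] in allowed for col, allowed in conditions.items()):
            yield row

subset = list(apply_stream_key(SALES, {"product_id": (101, 102, 105)}))
print([r["product_id"] for r in subset])  # [101, 102, 105]
```

Because the function is a generator, rows outside the subset are discarded one at a time instead of being collected first, which mirrors the goal of limiting how much data is processed at once.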
